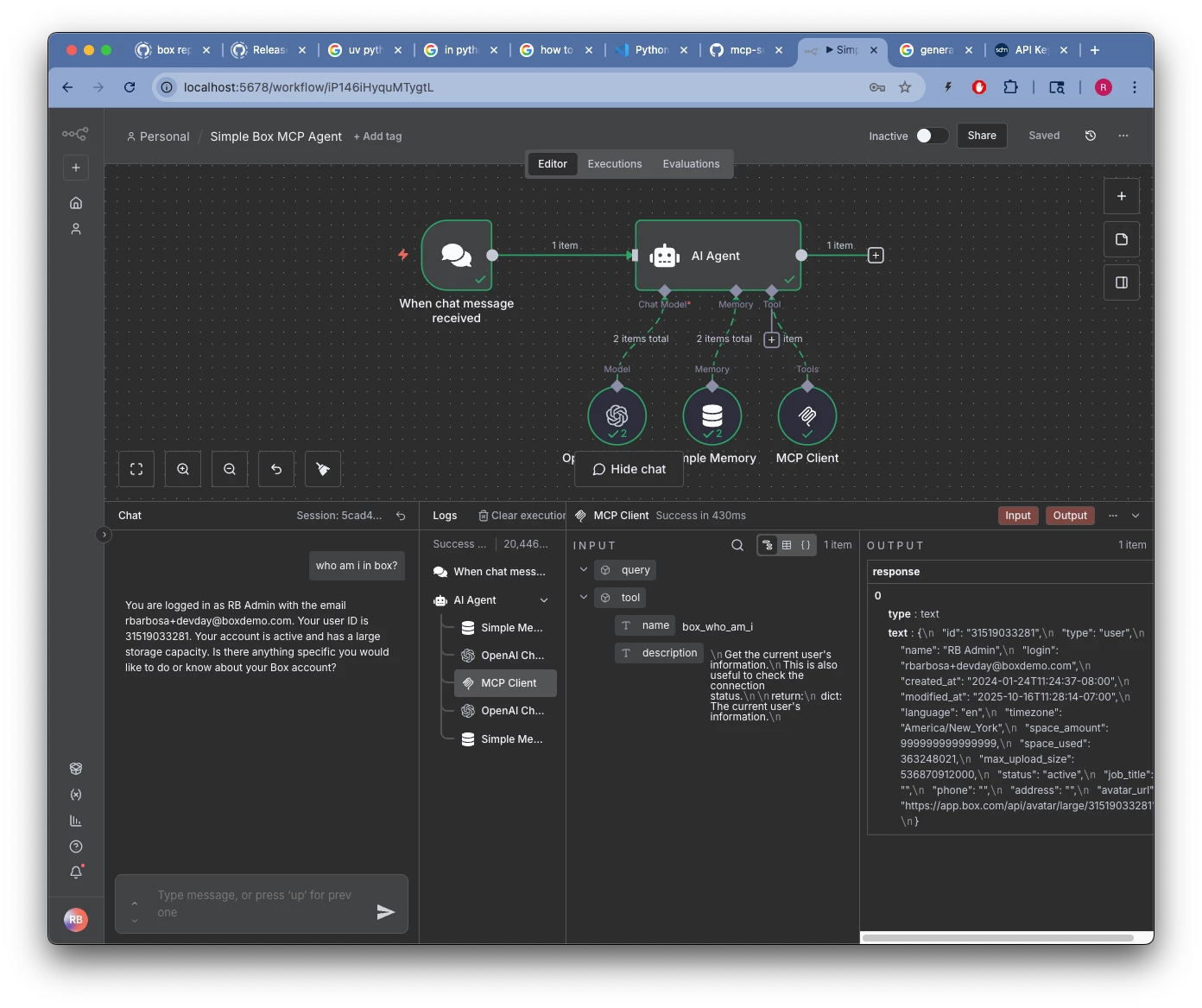

I read the article below. It’s awesome!!

https://blog.box.com/box-community-mcp-server-updates-you-didnt-know-you-needed

I have two questions:

- The authentication parameter is --box-auth, not --auth, right?

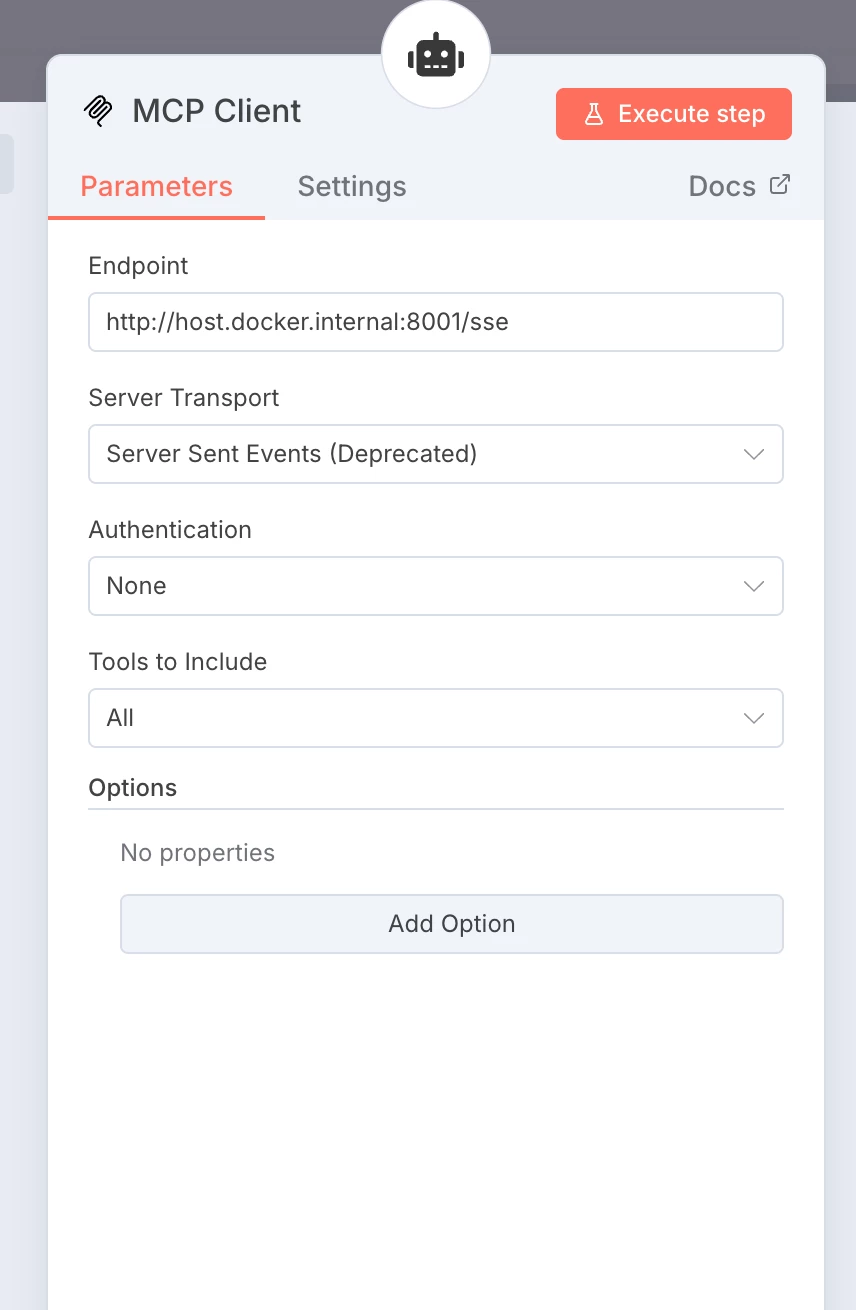

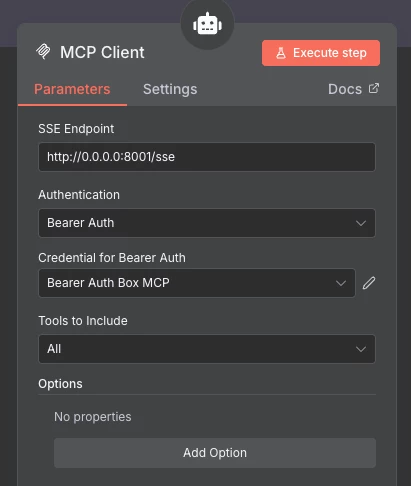

- I can’t connect the n8n MCP Client when its endpoint is set to http://0.0.0.0:8001/sse. I need to set it to http://host.docker.internal:8001/sse instead. Do you use a local Docker and a local MCP server, or not?

- If I set the Authentication parameter to “None”, the MCP Client can’t connect to the local MCP server. The local MCP server displays the error below:

- WARNING:middleware:No token configured, rejecting all requests

INFO: 127.0.0.1:53381 - "GET /sse HTTP/1.1" 401 Unauthorized

- WARNING:middleware:No token configured, rejecting all requests
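
To make the two connection issues above concrete, here is a minimal Python sketch of the request I would expect a client to send. It rests on assumptions: the 401 and the "No token configured" warning suggest the server wants a token, and I am guessing it is a standard `Authorization: Bearer` header, which the log does not confirm. The function name `build_sse_request` and the token value are hypothetical. The host is a parameter because 0.0.0.0 is a bind address rather than a destination, so a client inside a Docker container has to use something like host.docker.internal to reach the host machine.

```python
import urllib.request
from typing import Optional


def build_sse_request(host: str, port: int, token: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a GET request for the server's /sse endpoint."""
    url = f"http://{host}:{port}/sse"
    headers = {"Accept": "text/event-stream"}
    if token:
        # Assumption: the server validates a standard Bearer token header.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(url, headers=headers, method="GET")


if __name__ == "__main__":
    # Hypothetical token; replace with whatever the server was started with.
    req = build_sse_request("host.docker.internal", 8001, token="my-secret-token")
    print(req.full_url)
    print(req.get_header("Authorization"))
```

Passing the request to `urllib.request.urlopen(req, timeout=5)` would actually open the connection; a 401 response like the one in the log is raised as `urllib.error.HTTPError`.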